Mente.chat

An AI-powered chat application that lets users interact with and query their personal document database, using either a custom LLM server or the OpenAI API.

Technologies Used

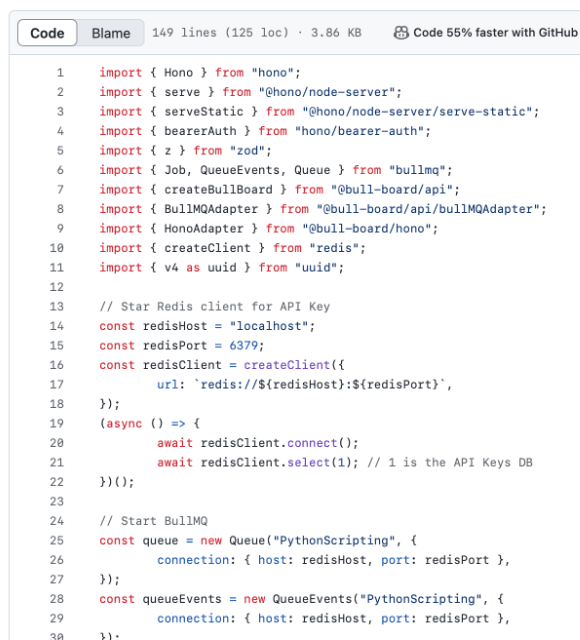

The landing page was built with Astro, with a focus on delivering a fast website. On the backend, I built several servers. The first is a Cloudflare Worker that handles API authentication, backed by Redis to speed up the process. The second is the core server, built with Hono (a web framework running on Node.js), which communicates with an LLM server for AI-powered interactions, while Qdrant serves as the vector database for managing and querying document data.

To address performance concerns and manage resource allocation, I implemented BullMQ for request queue management, which helped balance incoming client API calls against the limited resources of the LLM server. The Q&A functionality, which forms the AI backend, was built in Python with libraries for PDF conversion, text splitting, and summarization. Finally, the LLM server runs TGI (Text Generation Inference) from Hugging Face.

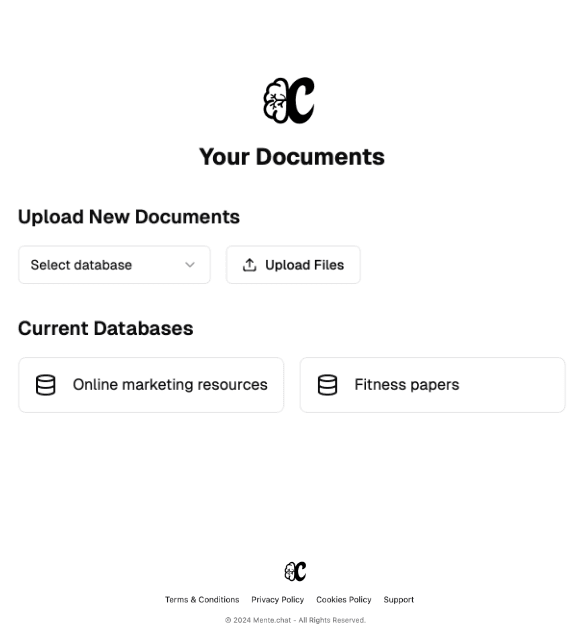

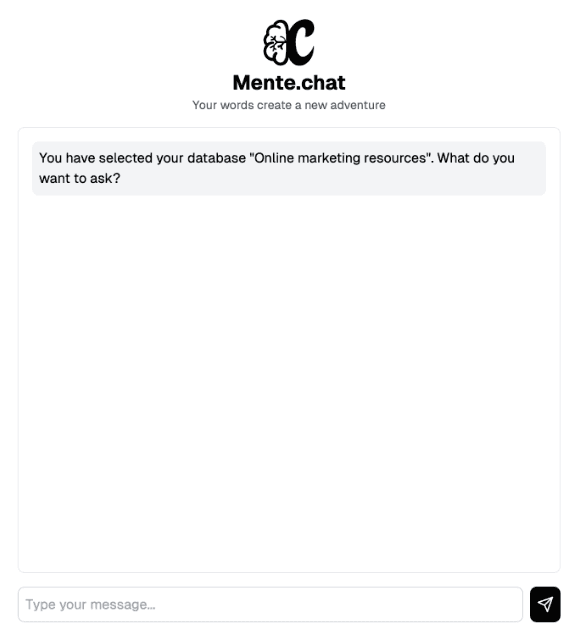

- AI-powered chat UI

- Personal docs query

- Custom LLM server

- OpenAI API integration

- Durable custom database

- Queue management

Challenges

Developing Mente.chat as a solo developer presented significant challenges. The large backend required a complex architecture and robust resource management. This included setting up Hono to work seamlessly with the LLM server and with Qdrant, BullMQ, and Redis, each of which plays a crucial role in the app's functionality.

One of the major hurdles was efficiently handling client API calls against the limited resources of the LLM server. This led me to implement BullMQ for request queuing and Redis for rapid user authentication. These solutions greatly improved the application's performance and helped avoid memory leaks and incomplete requests.
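The queuing idea can be illustrated with a minimal sketch. This is not BullMQ (a Node.js library) and not the project's actual code: it uses Python's standard `asyncio` as a stand-in to show how a fixed number of workers keeps the count of in-flight LLM requests bounded. The names `fake_llm_call` and `run_queue` are hypothetical.

```python
import asyncio


async def fake_llm_call(prompt: str) -> str:
    # Hypothetical stand-in for a request to the LLM server.
    await asyncio.sleep(0.01)
    return f"answer to: {prompt}"


async def run_queue(prompts, concurrency=2):
    """Process prompts with at most `concurrency` requests in flight."""
    queue = asyncio.Queue()
    for index, prompt in enumerate(prompts):
        queue.put_nowait((index, prompt))

    results = [None] * len(prompts)
    in_flight = 0
    peak = 0  # highest number of simultaneous requests observed

    async def worker():
        nonlocal in_flight, peak
        while True:
            try:
                index, prompt = queue.get_nowait()
            except asyncio.QueueEmpty:
                return  # nothing left to process
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                results[index] = await fake_llm_call(prompt)
            finally:
                in_flight -= 1
                queue.task_done()

    # The number of workers is the concurrency limit.
    await asyncio.gather(*(worker() for _ in range(concurrency)))
    return results, peak


if __name__ == "__main__":
    answers, peak = asyncio.run(run_queue(["a", "b", "c"], concurrency=2))
    print(answers, "peak in-flight:", peak)
```

Requests beyond the limit simply wait in the queue instead of overloading the server, which is the same trade-off a job queue with a worker concurrency setting provides.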

Another significant challenge lay in developing the Q&A system, which involved complex Python scripting for PDF conversion, text splitting, and summarization. At first I used LangChain for this text processing, but I realized that I could do it more simply with other Python libraries.
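As an illustration of how simple the text-splitting step can be without a framework, here is a minimal sketch in plain Python. It is an assumption, not the project's actual code: it splits by character count with a fixed overlap, and the function name `split_text` and its default sizes are hypothetical.

```python
def split_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks that overlap.

    The overlap keeps some shared context between neighbouring chunks,
    so a sentence cut at a boundary still appears whole in one of them.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk reaches the end of the text,
        # so no trailing chunk made only of overlap is produced.
        if start + chunk_size >= len(text):
            break
    return chunks


if __name__ == "__main__":
    for chunk in split_text("abcdefghij", chunk_size=4, overlap=1):
        print(chunk)
```

Each chunk can then be embedded and stored in the vector database; a real pipeline would more likely split on sentence or paragraph boundaries rather than raw character offsets.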

Despite the technical achievements, the project was ultimately discontinued due to the large amount of resources and time required to build a production-ready application, especially for a solo developer like me.